🤖 AI Development: Full Speed Ahead or Pump the Brakes? The Great Debate

🧠 Why are the brightest minds in AI pushing for a 6-month pause, while others say it doesn't go far enough? 🤯

Hello Creative Technologists!

Today, we’re diving deep into the hottest debate of the week: is AI development and deployment moving too fast for our own good?

No doubt, AI is the new space race and arms race, with the public and private sectors racing at full force toward the next big AI breakthrough.

More critically, these AI systems are being productized and deployed into the wild at a speed never seen before. Timelines are shrinking, and anxiety is in the air. Do we pump the brakes, or go full speed ahead?

Today we’ll explore both sides of the argument, and I’ll leave it to you to draw your own conclusions. As is often the case with complex topics, the answer isn’t so obvious. Let’s get into it!

A frothy debate after 2 big weeks for AI

This past week brought a modicum of relief after a two-week stretch packed with launch after launch of the most advanced AI capabilities the world has ever seen.

The outcome? Unprecedented AI power to the people.

But is it too much power?

Eliezer Yudkowsky certainly thinks so. To him, the open letter calling for a 6-month pause, signed by the likes of Gary Marcus, Elon Musk and Steve Wozniak, simply didn't go far enough; it even trivialized the issue.

Controversial headlines aside, let's dig into his argument:

To Eliezer, AI is *far* beyond nuclear 🤯

He argues that instead of a paltry 6-month pause, we need an *indefinite* worldwide moratorium on large AI training runs, a shutdown of large GPU clusters, and limits on the compute used for AI training.

Eliezer's approach is simple:

- Strictly regulate and track GPUs and AI hardware.

- Gradually lower the ceiling on computing power used for AI training as tech advances.

- No exceptions for governments or militaries. Humanity as we know it is at stake.

- Non-compliance? Airstrikes 💥

Are we moving from the era of bombing caves to bombing data centers?

It truly feels like a sci-fi movie in which rogue entities and their GPU clusters are targeted for elimination.

But might there be some credence to these concerns? 😅

The big data era (that preceded the current AI era) digitized everything, creating treasure troves of data — both in public and private stores.

Data is the new oil, and with AI, extracting this crude resource is easier than ever.

Critically, wielding this power isn't limited to big tech giants or nation-states anymore. Anyone can use it for good or evil.

People have already shown wild capabilities.

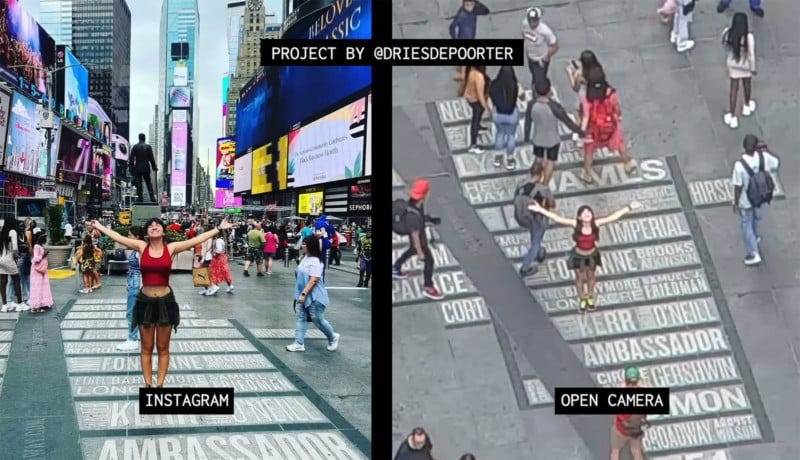

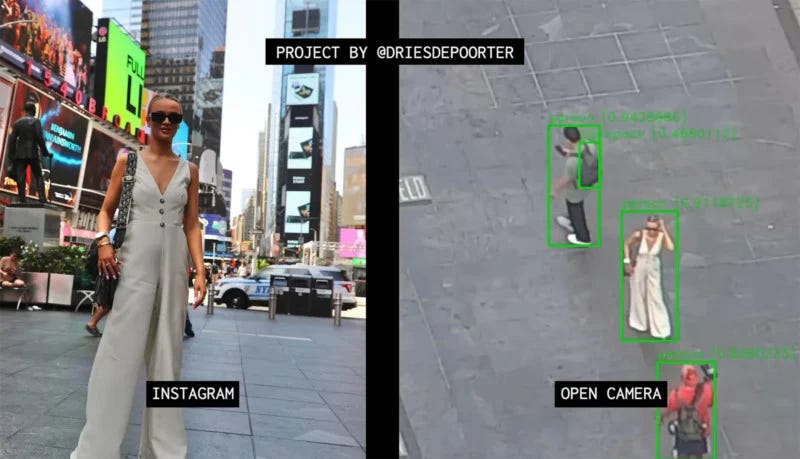

Take this AI artist, who geolocated top influencers using Instagram and publicly available camera feeds.

This type of "pattern of life analysis" or spatiotemporal analysis has traditionally been the domain of the intelligence community.

Now imagine adding GPT-4 to the mix…

It's no surprise that OpenAI has been selective about opening up multimodal capabilities.

Sure, we've been able to run detection & tracking algorithms on media.

But combine that with the "reasoning" ability of GPT-4-class models (e.g. to parse social feeds), and you get Big Brother in a box.

Perhaps certain types of models should be more regulated than others?

IMO, image generation has far less downside than general-purpose models like GPT-4, especially when they're multimodal.

The potential for misuse is much higher with models of GPT-4's capability.

But it isn't exactly rainbows and sunshine either. VFX and Photoshop have always been around, but image models enable disinformation at an unfathomable scale.

My explicitly labeled VFX videos fool millions. Now imagine what bad actors will do.

Prevention vs Firefighting?

Detecting synthetic media will need to become a continuous patching process, similar to addressing vulnerabilities and zero-day exploits.

Critically, these aren't hypothetical "some day" problems. They're here and now.

To address disinformation, big tech players can act fast, spin up large teams, train discriminators, and play the game of whack-a-mole.

But what will small to medium businesses do?

As bad actors use these tools maliciously in new ways, we may see dire consequences this year.

Extremely Consequential Extremes

The AI debate has two extremes: treating it like nuclear, or treating it like open source.

Some argue that open-source AI allows the far more numerous good actors out there to police the smaller number of bad ones, minimizing the harm.

They further fear that regulation would only benefit incumbents, and could lead to a centralization of power worse than Orwell’s wildest imagination.

But open diffusion models are one thing. Open-source LLMs could let hackers churn out disposable apps and scripts, amplifying the potential harm once they gain access to someone's credentials.

This is a more worrisome scenario than democratized deepfakes.

I'm starting to understand why some might call for a pause in AI development, while others might call that a doomer assessment. Both views are valid.

As GPU clusters become more efficient and accessible, it becomes harder to police their usage without extreme surveillance on every device.

Requiring OS-level restrictions to prevent rogue AI creation seems like a dystopian outcome (sounds very 1984, eh?), but the potential downsides of unrestricted AI development are hard to predict.

Of course, vested interests also play a role in AI development.

OpenAI, for example, calls for national authorities to scrutinize large training runs.

I wonder who this would impact the most. Might it be their biggest competitors, current or future?

This creates a war-like situation in the stock market, with companies racing to launch AI products. Ethics teams may be sacrificed in the process (e.g. Microsoft), further complicating the situation.

Regardless of where you fall on the spectrum from "treat it like open source" to "treat it like nuclear," we're entering an era in which we need to hold two opposing ideas in our heads.

As Linus Ekenstam likes to say, it's OK to be excited and scared at the same time.

Does the answer lie in the balance? ⚖

What's the right level of the stack to enforce AI regulation?

How long until someone makes a principled Second Amendment-style argument for "the right to own GPUs"?

Share your thoughts and let's debate it in this online public square! 🗽

One Last Thing:

I spend a non-trivial amount of time creating threads like this, and I’d like to keep ‘em coming. It would really help me out if you:

- RT this thread on Twitter to share with your audience (it really does help!)

- Follow @bilawalsidhu for more creative tech magic (and thanks for 18k!)

- If you aren’t already, sign up to get this Creative Tech Digest sent neatly to your inbox:

Bilawal Sidhu signing out. See y’all in the next one!
