Depth2Image AI Use Case Round Up

/imagine reskinning real & virtual worlds with your imagination

Hey Creative Technologists — welcome to another AI round up!

Previously, we’ve covered the potential of depth2img to maintain structural coherence when making AI art. As a quick refresher:

Now, let’s take things a step further and explore concrete use cases, and evaluate the strengths and weaknesses of this technique in action.

Reskinning Places & Objects — Both Real & Virtual

With Depth2Image, the structure depicted in your image is the guide. What matters is that you block out your scene and the elements in it, then take a few photos. Critically, it doesn’t matter how you make this image: it can be a real-life photo or a synthetic image made with simple primitives in a 3D tool like Blender.

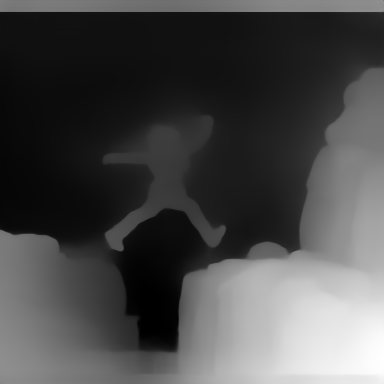

Whether you infer a depth map or export a synthetic one, it’s all the same thing. Think of your input images in this Depth2Image workflow as a “3D” rough sketch, also called “greyboxing” in the game development world. Once you’re happy with an image reference, run it through depth2img and voilà!
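If you go the synthetic route, here’s a rough sketch of turning a raw depth pass into a guide image. It assumes the depth-conditioned model wants a relative, near-is-bright map like the ones monocular depth estimators tend to produce. The function name `depth_to_guide` and the `near`/`far` clip values are made up for illustration, so check what your own tool and model actually expect.

```python
def depth_to_guide(depth, near=0.1, far=100.0):
    """Turn metric depth (rows of floats, e.g. a Z pass) into a 0..1 guide map.

    Assumption: the model wants relative inverse depth, so near surfaces
    come out bright (1.0) and far surfaces come out dark (0.0).
    """
    # Clamp to a sane range, then invert so that closer means larger.
    inverse = [[1.0 / min(max(z, near), far) for z in row] for row in depth]

    lo = min(min(row) for row in inverse)
    hi = max(max(row) for row in inverse)
    span = hi - lo
    if span == 0:
        # A perfectly flat scene carries no relative depth information.
        return [[0.0 for _ in row] for row in inverse]

    # Rescale to 0..1; only the relative ordering survives, not real units.
    return [[(value - lo) / span for value in row] for row in inverse]


# Tiny 2x2 "render": a close object top-left, open sky bottom-right.
guide = depth_to_guide([[1.0, 2.0], [4.0, 1000.0]])
```

Because the result is only relative, a greyboxed Blender scene and a phone photo end up in the same kind of map, which is why the source of the image doesn’t really matter.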

Let’s start by making a virtual environment. Here are some fun results I explored while creating a new background for my video conferencing sessions. Of course what I wanted is my own personal holodeck:

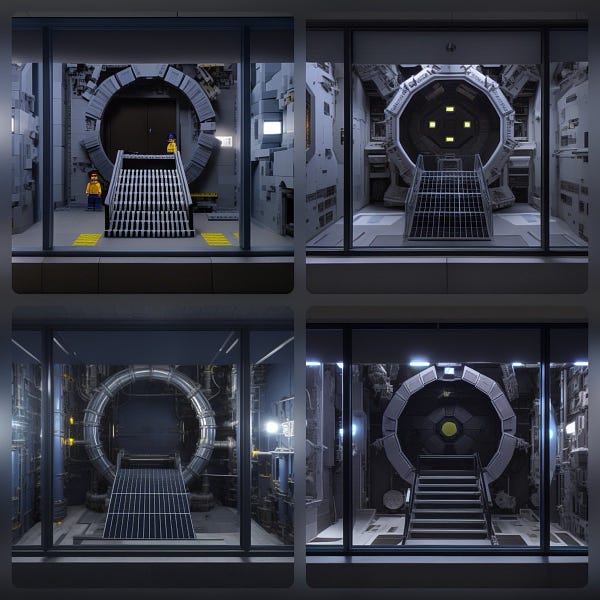

Now let’s consider the more complex composition below — you’re standing inside a control room, looking out the window to see a massive stargate ring, with a ramp leading up to it. Plus all the intricate details layered on top of it.

Achieving this kind of output consistently would be hard, even with complex prompt weighting and construction. By using depth to guide the image generation process, we can easily try completely different aesthetics, from a sci-fi reactor to a Lego reprise, while retaining the essence of the scene.

Whether you’re reskinning your game level or bedroom, depth is such a powerful way to provide concrete guidance to the sometimes chaotic AI image generation process.

But this technique can be practical too. Need to remodel your room? Get a massive Pinterest board customized to your own needs. Having launched ARCore APIs in the past, it blows my mind that this workflow for building an AR shopping app doesn’t really need ARCore or ARKit.

Got scale models? Snap a photo with your phone and use depth2image to reskin them. Use random things you have lying around to “greybox” in real life and think like an architect… Because IMO this is basically a prototype for a future XR application :)

Of course, you can also apply depth2image to video to get some fun results. In this case, I used a NeRF capture of a seal I came across on the SF Embarcadero. To add some spice, I made a fun animation, dramatically playing with the field of view to get some interesting perspective shifts.

Taking things a step further, you can also exploit geometric understanding to selectively change aspects of the scene, and elements contained within it. Think semantic segmentation + depth aware diffusion, like the following:

Not Just Places & Things — People Too!

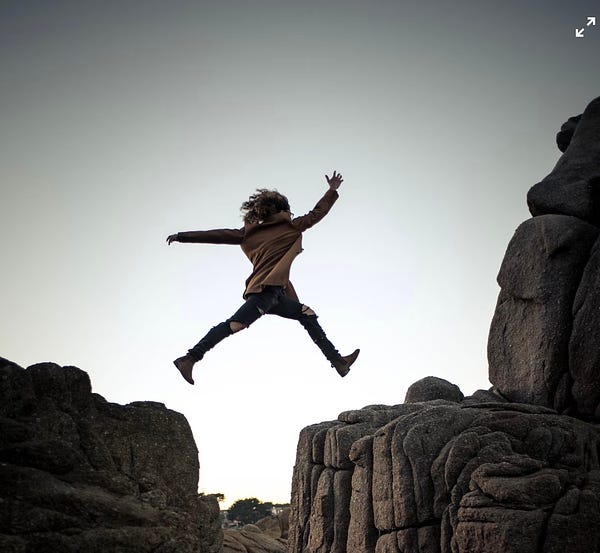

If you like the composition of a shot, like this one here, you can easily reskin it and swap the entities depicted in this world for what you really want, which is obviously Elmo taking the leap to live his dreams. So you could go to any stock image site and grab something. Heck, go into your own camera roll and grab something that tickles your fancy as a reference composition.

Naturally, we have to talk about synthetically generated 3D characters as well. In this case Blender Sushi has rendered out a short CG animation (unsurprisingly using Blender). This example beautifully illustrates how you can try so many different styles when reskinning this character while keeping the coarser geometry of the character fairly consistent.

A unique use case of depth2image is making digital humans: you can take live action footage, or “low quality” synthetic 3D renders, and “up res” them using depth guided img2img. Check out these results:

How about a more cartoony result this time? Like a high quality GTA cover. Kind of looks like a scruffier Jason Statham 😁

Now let’s flip it. Here, Jesse nicely illustrates using screenshots from GTA 5 to compose a scene, then making it photorealistic using depth2image. How crazy is that? Using AI to make a game from 2013 resemble real world imagery. Talk about breathing new life into a decade-old game.

Synthetic imagery as an input for Depth2Image is even more perfect for animation. In the example below, Blimey shows how you can use a cheap, real-time 3D tool to quickly compose your scene and animation, then add a far more eye catching hand drawn style using depth2img. It’s kind of like cel shading on steroids… Except, you can apply cel shading to anything you can image with a camera or rendering engine.

Of course since we’re using 2.5D depth maps to guide the diffusion process, we can also take the output 2D image from depth2image, and project it back into 3D space — like in the following example by Stijn:
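To be clear, this isn’t Stijn’s pipeline, just a minimal sketch of the general idea of lifting a depth map back into 3D with a simple pinhole camera model. It assumes square pixels, a known horizontal field of view, and depth values in consistent units (a relative depth map would need some assumed scale first). The function name `backproject` and its parameters are hypothetical.

```python
import math


def backproject(depth, fov_x_deg):
    """Lift a depth map (rows of floats) to camera-space (x, y, z) points.

    Assumptions: pinhole camera, square pixels, principal point at the
    image centre, depth measured along the camera's viewing axis.
    """
    height = len(depth)
    width = len(depth[0])

    # Focal length in pixels, derived from the horizontal field of view.
    focal = (width / 2) / math.tan(math.radians(fov_x_deg) / 2)
    cx, cy = width / 2, height / 2

    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Use pixel centres (u + 0.5, v + 0.5) and scale the ray by depth.
            x = (u + 0.5 - cx) * z / focal
            y = (v + 0.5 - cy) * z / focal
            points.append((x, y, z))
    return points


# A flat wall two units away, seen through a 90 degree lens.
cloud = backproject([[2.0, 2.0], [2.0, 2.0]], fov_x_deg=90.0)
```

Each pixel gives you exactly one point along its camera ray, and each point can take its color from the generated image at the same pixel. Nothing exists behind the surfaces the camera saw, which is the “2.5D” part.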

Depth2img is fun, and I enjoyed making this round up of all the cool stuff creators are making with depth2image. You might be surprised how much structure can be conveyed with a single depth map image. But remember, it is called 2.5D for a reason. Go too far outside the camera frustum of the depth map, and it won’t really work.

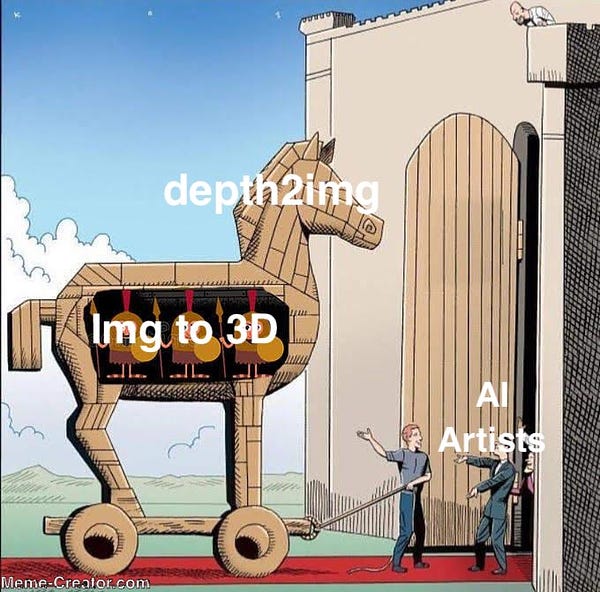

But, let’s be honest… really where all of this stuff is going is… well, 3D. And that is the perfect subject for a future round up.

Cheers,

Bilawal Sidhu

PS: Check out the creative technology podcast
