🧠From Uncanny Valley to Photorealism: The Game-Changing Leap of Generative AI Models. Have We Shattered the Visual Turing Test? 🤯

GenAI models like Midjourney and Stable Diffusion have hit the threshold of photorealism. Let's explore what these breakthroughs mean for 3D, VFX and creation as we know it.

Hello Creative Technologists!

Today we’ll summarize the remarkable leap generative AI models have taken in just the last 3-6 months.

Then we’ll examine how Gen AI will infuse itself into 3D rendering and VFX workflows in the mid to long term.

But first, let’s summarize recent developments in generative AI:

For the better half of 2022, creators have been able to use tools like Stable Diffusion and DALL-E 2, combined with a range of in-painting and post-processing tools, to create images that look indistinguishable from reality.

Starting in 2023, with the rise of ControlNet and composable LoRAs, technical artists have been able to exert greater artistic control over Stable Diffusion to get even more photorealistic results.

Midjourney v5 has now made that multi-step process a single, well-defined text prompt. No need to wrangle multiple components and passes together.

With its Discord interface, Midjourney lacks the creator-centric UX of Adobe Firefly. But with its immaculate dynamic range and improved natural language prompting, it’s the best model out there right now.*

*v5 wins prompt-for-prompt as of March 26, 2023 (dates are crucial in AI!)

Okay! Now let’s dive into Midjourney & Stable Diffusion, and the massive advances they’ve made in coherent photorealistic image generation.

First off, Midjourney v5 is far more photorealistic out-of-the-box, whereas its predecessor has a more painterly, stylized bent.

Click through the above to watch a thorough comparison of v5 vs v4 in case you want to go deeper. Otherwise, let's keep going...

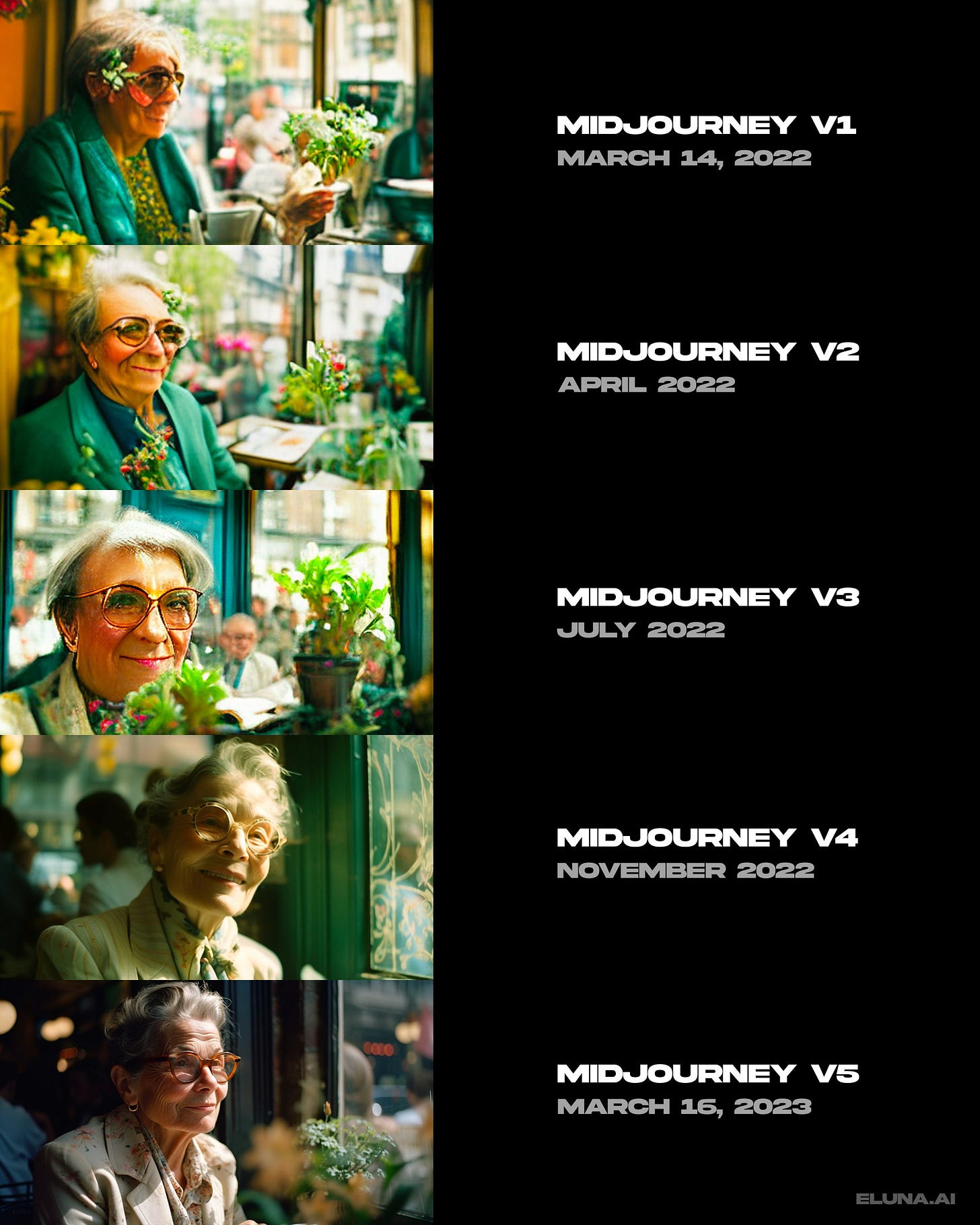

Not to put too fine a point on it, but collaged below is a progression of the same prompt in Midjourney from v1 through v5. Astonishing progress/time:

With v5, Midjourney has crossed the chasm of uncanniness, and is well into photorealistic territory.

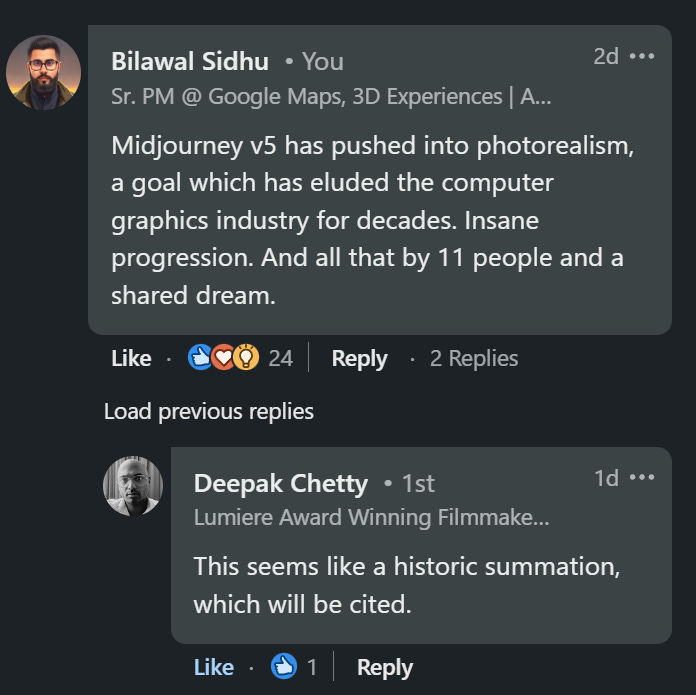

And this feeling is resounding amongst professionals. Some might even say it's one for the history books!

I mean, how can generative AI *not* absolutely disrupt 3D engines like Unreal & Unity, or even renderers like Octane & Redshift?

Just look at the quality of this Midjourney generation by Linus 🤯

Like, who knew it'd take generative AI to cross the uncanny valley, particularly for digital humans? No sub-surface scattering required!

You've got all you need to realize your ambitious Bollywood dreams:

Virtual sets? Not a problem. These Midjourney generations easily surpass the quality of an Unreal Engine or Octane render.

I mean, just look at the high-frequency detail in the chair, the knitting, the windows. And good lord (!) the dynamic range is immaculate.

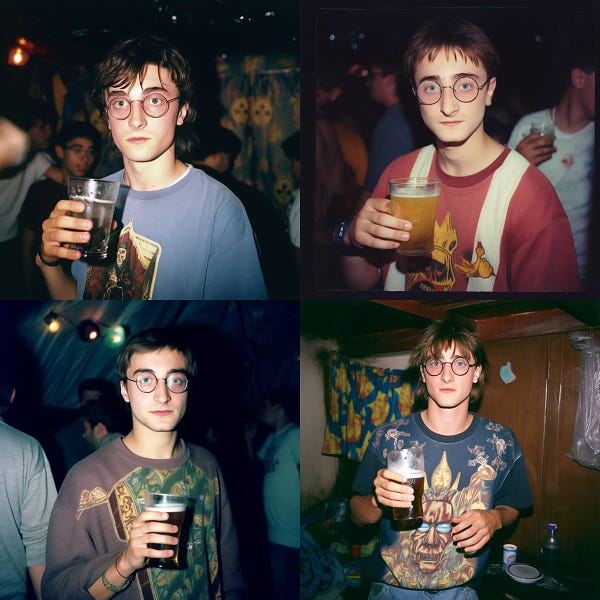

Obviously, the meme potential is exceedingly high too :)

Especially given MJ's new approach to prompting, which allows us to compose complex scenes with multiple characters.

Fancy a Hogwarts rave circa 1998? No problemo:

Product photography gets a huge boost too. Imagine products before you create them, or fine-tune models with actual product photography to stage virtual shoots on demand.

In the past, this required scanning assets or modelling them from scratch, plus hours in 3D tools tweaking textures, lighting, animation and edits.

It's no surprise that Jensen Huang, CEO of NVIDIA, said “Every single pixel will be generated soon. Not rendered: generated.”

Obviously, I've been saying this for a hot minute now, especially after playing with ControlNet:

In the near term, hybrid approaches that fuse the best of classical 3D + generative AI will reign supreme.

Run a lightweight 3D engine for the first pass, then run a generative filter on top to convert it into AAA quality.

Think NVIDIA's "DLSS" on steroids, and I’m not kidding when I say it’ll be like channel surfing realities:

Key point is — 3D’s got a good foundation. But with the rise of generative AI, explicitly modelling reality seems overrated for many visualization use cases. Instead, a hybrid approach absolutely crushes it!

E.g. throw in an uncanny-looking Unreal model and use it to drive the performance. Then run it through a generative AI “filter” and get out a much more photorealistic result. Minor temporal inconsistencies aside (which'll be solved!), the result is beyond Unreal.
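To make the two-pass idea a bit more concrete, here's a minimal Python sketch of the structure: a cheap "engine" pass produces each frame, then a generative "filter" is applied on top. Everything here is a hypothetical stand-in. `render_first_pass` and `toy_generative_model` are toy placeholders I made up for illustration (not Unreal, Midjourney or Stable Diffusion), and the `strength` pixel blend is only an assumption about how you might dial the filter up or down. Real image-to-image models work differently under the hood.

```python
from typing import Callable, List

Frame = List[List[float]]  # toy grayscale image, pixel values in [0, 1]


def render_first_pass(t: int, size: int = 4) -> Frame:
    """Hypothetical stand-in for a lightweight 3D engine: a gradient that scrolls with t."""
    return [[((x + t) % size) / (size - 1) for x in range(size)] for _ in range(size)]


def toy_generative_model(frame: Frame) -> Frame:
    """Hypothetical stand-in for an image-to-image model; here it only boosts contrast."""
    return [[min(1.0, max(0.0, (p - 0.5) * 2.0 + 0.5)) for p in row] for row in frame]


def hybrid_frame(render: Frame, model: Callable[[Frame], Frame], strength: float) -> Frame:
    """Blend the engine's render with the model's output (an assumed, simplified scheme).

    strength=0.0 keeps the raw render; strength=1.0 keeps only the generated image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    generated = model(render)
    return [
        [(1.0 - strength) * r + strength * g for r, g in zip(r_row, g_row)]
        for r_row, g_row in zip(render, generated)
    ]


def hybrid_sequence(num_frames: int, strength: float) -> List[Frame]:
    """Per frame: first pass from the engine, second pass from the generative filter."""
    return [
        hybrid_frame(render_first_pass(t), toy_generative_model, strength)
        for t in range(num_frames)
    ]
```

The point of the shape is that the 3D pass owns geometry, camera and performance, while the generative pass only owns the final look. Swap the toy model for a real one and the loop stays the same.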

Video is in its infancy, but clearly the next target. Jon made this short film with a freaking iPhone + Midjourney + Runway.ml Gen-1.

And it's all filmed in his apartment! This is James Cameron style virtual production ($$$) democratized.

Imagine where we'll be in +6 months...

Obviously, Midjourney's David Holz has always been aiming towards transforming interactive content. Stability’s Emad Mostaque wants to run his visual engine at 60 fps. That alone is massive progress, and many more will enter the fold.

To put it succinctly: generative AI will first transform ideation, then asset creation, then 3D engine embellishment. But eventually, we'll be playing dreams in the cloud 🌥

And I, for one, can't wait!

That's a wrap! If you enjoyed this deep dive on AI's impact on real-time 3D & offline VFX:

- RT this thread on Twitter to share with your audience (it really does help!)

- Follow @bilawalsidhu for more creative tech magic (and thanks for 15k!)

- Sign up to get these Creative Tech Digests sent neatly to your inbox:

Bilawal Sidhu signing out. See y’all in the next one!

Not only is Midjourney v5 capable of generating photoreal humans, it is capable of generating photoreal humans of multiple ethnicities! I used to try making Indian characters using MJ v3 and I would get the same faces over and over because of probable limitations in its training dataset. Now with v5, the variance in its character generation is really amazing. Check out a few of the examples I was able to generate:

https://futuretelescope.substack.com/p/my-money-where-my-mouth-is

It isn’t just game-changing – it’s life-changing.