🧠 Why GenAI Lost Its Spark: The State of Creative Tech in 2023

Breaking Down the Hype and Reality of AI in Creative Technology

🚀 Creative Technology Digest

Your weekly dose of the future of creation and computing.

🍇 Juicy Topic Of The Week: GenAI felt like magic a year ago. Now it feels incremental. Has the creation revolution hit a plateau?

Remember the seismic shift we felt in the middle of last year, when AI breakthroughs like DALL-E 2, Stable Diffusion, and Midjourney burst onto the scene? It was a moment when technology didn't just assist but amazed, turning skeptics into believers. Fast forward to today, and the landscape feels different. Progress that once seemed meteoric now seems incremental. So what's happening? Is the allure of creative tech fading, or are we simply getting used to living in a world where magic is the new normal?

🌶️ Spicing It Up: A Critical Lens on AI Creation Startups

Case in point: I came across this fair, albeit spicy, critique of AI creation startups, so let me build on it 🌶️

Every SD-based AI startup business model starterpack

- Inference

- Txt2img

- Img2img

- ControlNet (New !)

- AnimateDiff (New !)

- Chatbot

- Model Marketplace (Still can't beat HF)

- LoRA Training (Trending !)

- Model merging (Soon ?)

Honestly, I'd like to see a… twitter.com/i/web/status/1…

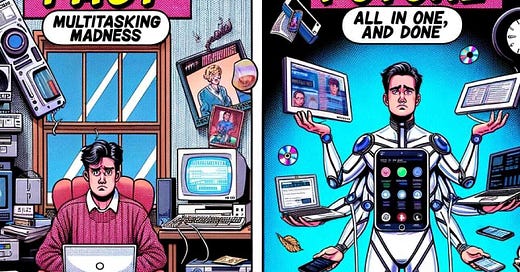

I largely see startups tackling AI creation tools from two angles:

1) Specialized Unbundling: taking the problem space of creation itself and unbundling it, tackling modalities like text, image, voice, audio, video, and 3D in isolation, then refining the stickier workflows that emerge (e.g., image-to-video or video-to-3D). But fundamentally, you still need to use such a tool alongside "classical" creator tools. It's less a replacement than an addition to the tool belt.

2) Trend-driven Adoption: taking AI creator workflows that emerge organically around open source models and tools like A1111/ComfyUI, and turning them into a couple of clicks instead of a weekend of wrangling. Adoption (and revenue) spikes around a trend, then people move on to the next thing and the next workflow wrapper.

Few are focused on reimagining creation altogether, orchestrating all the modalities in concert with each other. They're out there, but they are few and far between. That's unsurprising, given this is a lot to bite off at once, especially for a startup.

Meanwhile, incumbents like Adobe are meeting users where they are, gradually infusing AI into existing creation tools and workflows. So even though that Firefly vision video looks dope, I'd wager it'll be a long while before we see such an all-in-one tool from Adobe itself.

As for the big tech players and premier AI labs, I imagine they have all of these primitives sitting around in research and production, but may not have an incentive to build an Adobe or Autodesk "killer." Better to partner with incumbents and upstarts alike and provide them the capabilities.

Given these dynamics, what should startups focus on to truly make an impact in this space? Is it as simple as “solving a problem” — or is a more holistic reimagining necessary to see a real disruption to the content creation ecosystem?

🔗 Read and share your thoughts on Twitter/X

🎥 Creation Corner:

1. 3D Tools & Generative AI: A Match Made In Heaven - The future of creation is hybrid: have an underlying 3D representation you can exert fine-grained control over, then use generative AI to take it all the way.

Kitbashing 3D scenes together, and reskinning them with generative AI is fun 🪄

- Top: Output from Gen-1 (composited in AE)

- Bottom: Input from Maya viewport

Such an AI workflow is worth trying regardless of which 3D tool you use; you can even stage and capture your input in… twitter.com/i/web/status/1…

2. The Future Of 3D Rendering Is Generative - intermediate 3D representations enable controllable AI content.

I continue to be bullish on intermediate 3D representations to make controllable AI content.

Greybox and kitbash the world you actually want, and use generative AI to take it all the way.

The future is hybrid, and @Yokohara_h illustrates this well:

3. Relighting Video In Real Time - Immense potential for virtual sets and visual effects. More tests are forthcoming that rely on neural rendering to emulate advanced light transport effects like subsurface scattering.

The quality of relighting you can do with AI is pretty amazing. So much potential for virtual sets and visual effects.

Segmented the video and inferred PBR textures locally using SwitchLight Studio (on an NVIDIA RTX 6000 Ada GPU).

More videos on this awesome technique soon.

🌱 Long-Form Deep Dives:

🎙️ Podcast: I had the opportunity to join the Open Metaverse Podcast, hosted by the talented duo of Marc Petit (Epic Games) and Patrick Cozzi (Cesium). Long-time listener, first-time dialer 🙂 Their research was impressive and made for a fun conversation ranging from AR/VR and geospatial 3D to generative AI and the creatorpreneur life. Check it out on your favorite podcast platform via the link below.

Bilawal Sidhu: A Mind Living at the Intersection of Bits and Atoms - Building the Open Metaverse

💌 Stay in Touch:

Got feedback, questions, or just want to chat? Reply to this email or catch me on social media.

P.S. Take a moment to tell me whether you like this new format.